|

I am a senior undergraduate majoring in Computer Science at California Institute of Technology (Caltech), with a minor in Robotics. 宋沛洋 / Email / CV / Bio / Google Scholar / GitHub / LinkedIn / Twitter |

|

|

[Jan 2026] Our paper on Large Language Model Reasoning Failures is accepted to TMLR with a Survey Certification. |

|

I build agentic reasoning systems: AI agents that can plan, act, verify, adapt, and improve over time. My work centers on making reasoning both capable and dependable, across formally verified environments and open-ended natural language. A recurring theme is leveraging structure—often neuro-symbolic—to provide control, interpretability, and reliability in increasingly autonomous systems. (1) Advancing formal reasoning from static models to adaptive agents. In theorem proving, correctness is verifiable—but effective agency is hard. My work advances formal reasoning systems by enabling learning through environment and data infrastructures [LeanDojo], bringing learned capabilities back into the prover via neural-assisted automation [Lean Copilot] and human-centered tooling [Human-AI Formalization], and more recently moving beyond static prediction toward adaptive agents [Adaptation] that operate over evolving libraries [LeanAgent] and long-horizon contexts [LeanProgress]. (2) Diagnosing and strengthening reasoning in natural language. Outside formal systems, correctness guarantees disappear—so reliability must come from principled diagnosis. My work takes a human-grounded, failure-driven approach: connecting classic cognitive phenomena to modern LLM behavior [A-Not-B], examining context sensitivity and cultural fairness in language [Idioms], extending from individual failure modes to questioning foundational assumptions in behavioral evaluation [Personality Illusion] and developing a systematic framework for understanding and mitigating LLM reasoning failures [Reasoning Failures]. (3) Enhancing the efficiency of reasoning systems. I complement algorithmic advances with a system-level perspective, developing architectural and temporal arithmetic techniques for efficient computation [Delay Space] [DelayNet]. My long-term goal is to build AI systems whose reasoning is as creative as human intuition and as dependable as formal logic. |

|

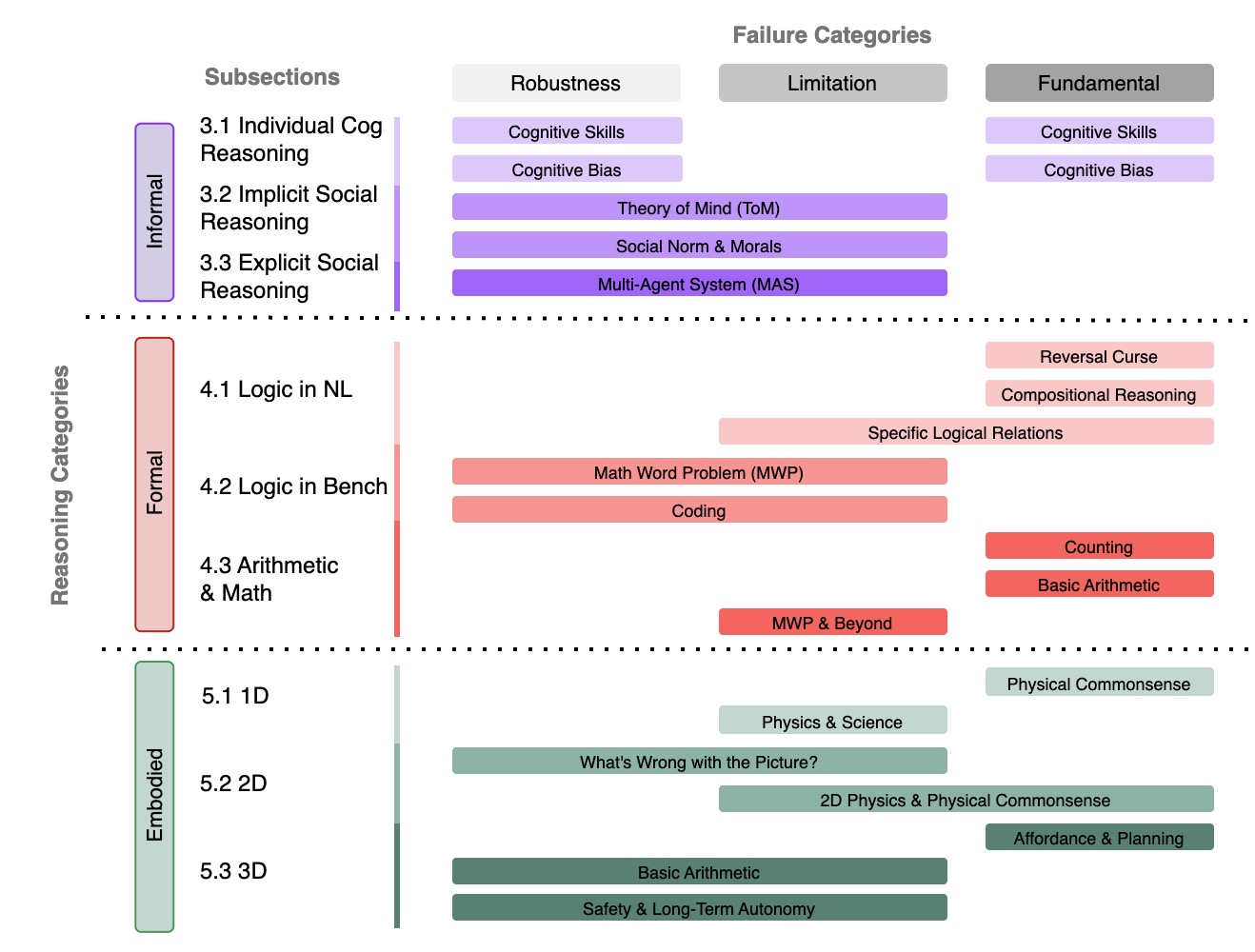

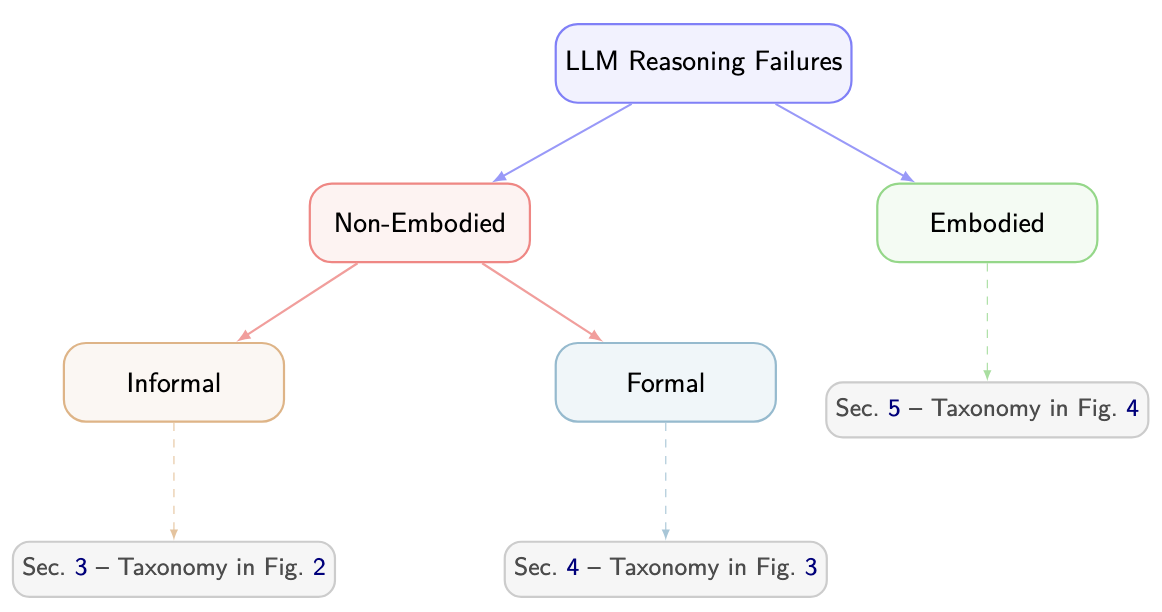

Peiyang Song*, Pengrui Han*, and Noah Goodman (* Equal Contribution) Transactions on Machine Learning Research (TMLR), 2026, Survey Certification arXiv / code / proceeding / media We present the first comprehensive survey dedicated to reasoning failures in LLMs. By unifying fragmented research efforts, our survey provides a structured perspective on systemic weaknesses in LLM reasoning, offering valuable insights and guiding future research towards building stronger, more reliable, and robust reasoning capabilities. |

|

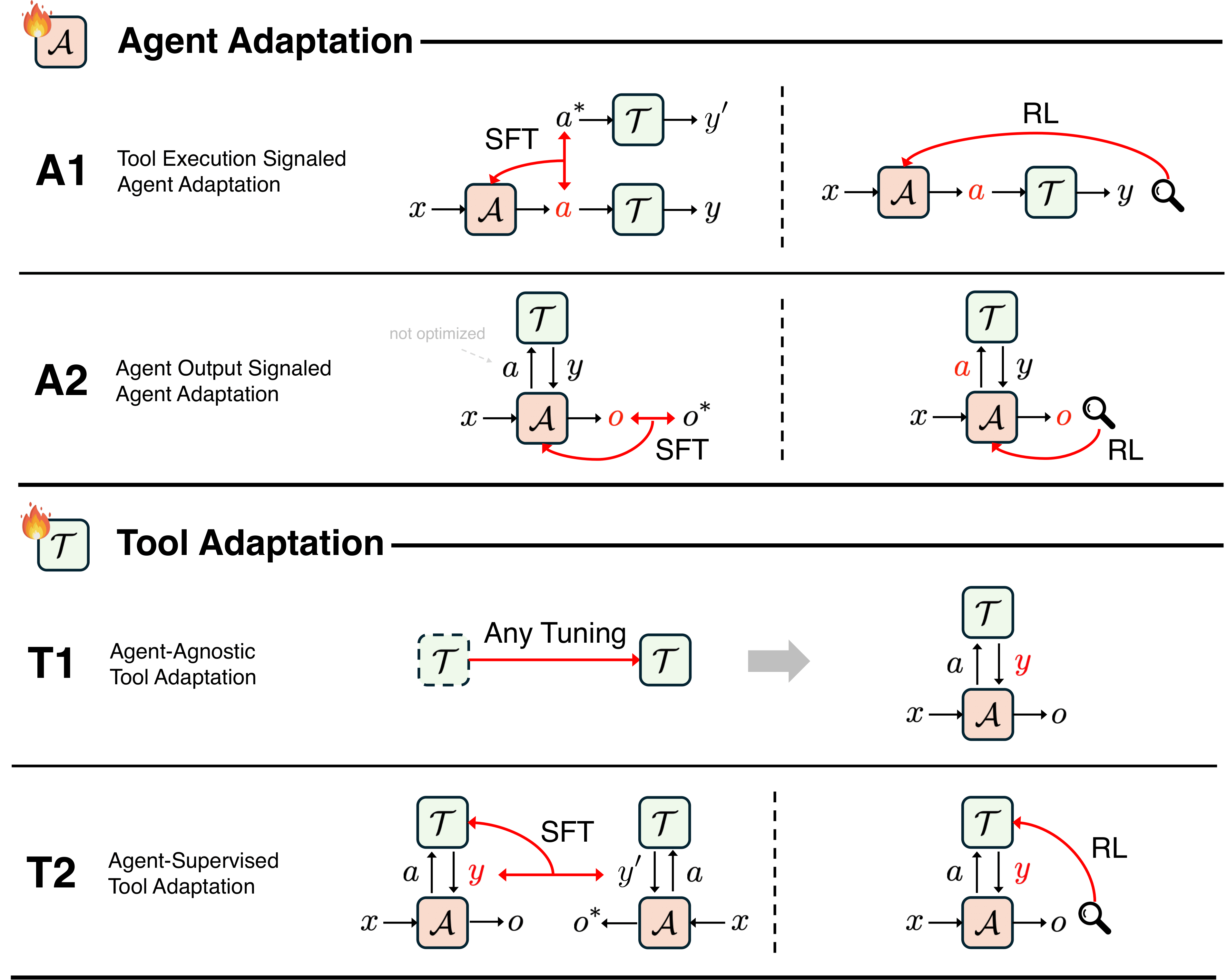

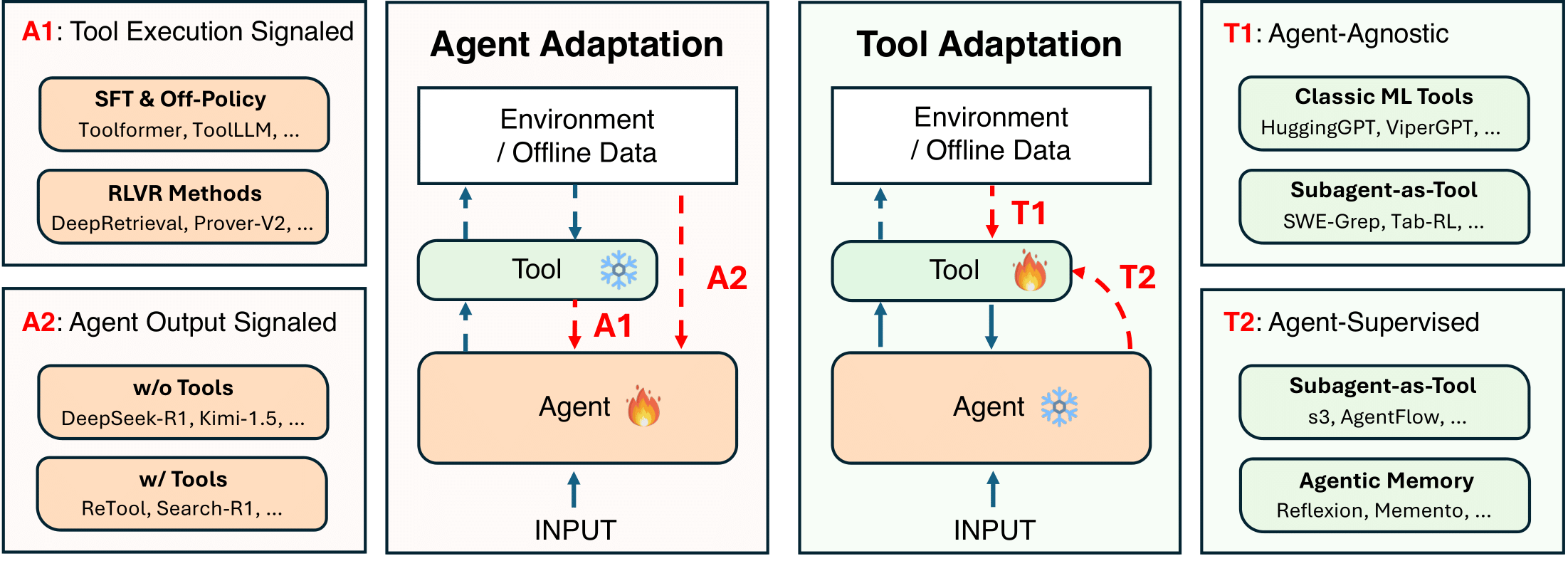

Pengcheng Jiang*, Jiacheng Lin*, Zhiyi Shi*, Zifeng Wang, Luxi He, Yichen Wu, Ming Zhong, Peiyang Song, Qizheng Zhang, Heng Wang, Xueqiang Xu, Hanwen Xu, Pengrui Han, Dylan Zhang, Jiashuo Sun, Chaoqi Yang, Kun Qian, Tian Wang, Changran Hu, Manling Li, Quanzheng Li, Hao Peng, Sheng Wang, Jingbo Shang, Chao Zhang, Jiaxuan You, Liyuan Liu, Pan Lu, Yu Zhang, Heng Ji, Yejin Choi, Dawn Song, Jimeng Sun, Jiawei Han (* Equal Contribution) Preprint, 2025 arXiv / code / media Cutting-edge agentic AI systems are built on foundation models that can be adapted to plan, reason, and interact with external tools to perform increasingly complex and specialized tasks. As these systems grow in capability and scope, adaptation becomes a central mechanism for improving performance, reliability, and generalization. In this paper, we unify the rapidly expanding research landscape into a systematic framework that spans both agent adaptations and tool adaptations. |

|

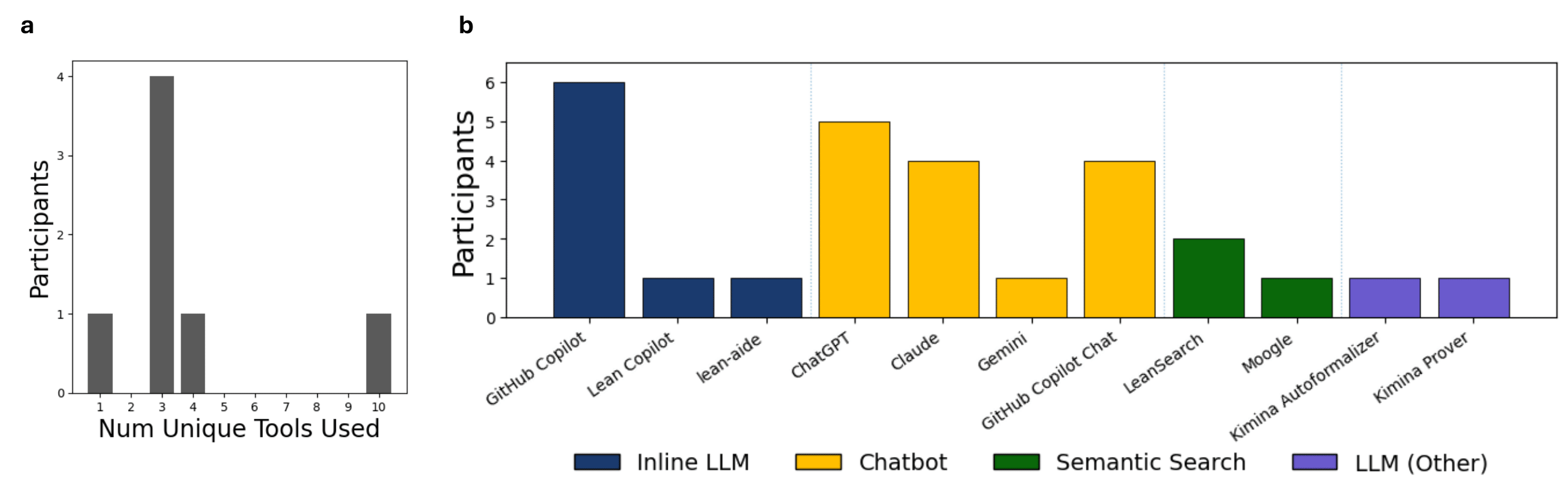

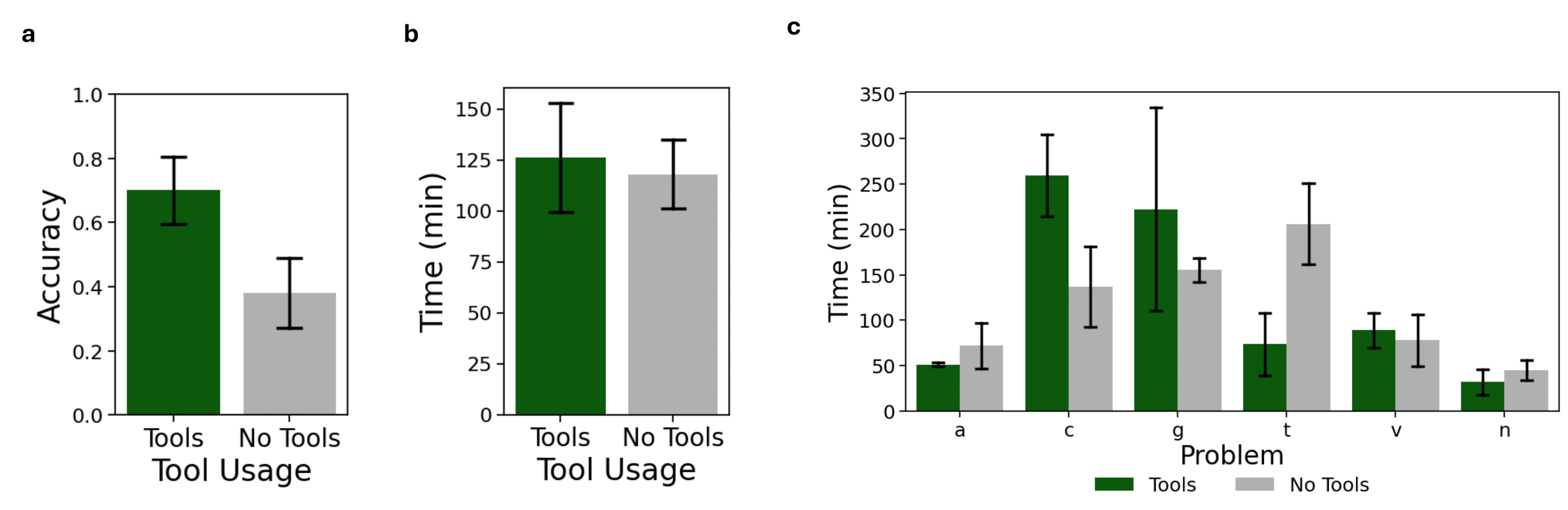

Katherine M. Collins*, Simon Frieder*, Jonas Bayer, Jacob Loader, Jeck Lim, Peiyang Song, Fabian Zasier, Lexin Zhou, Shanda Li, Shi-Zhuo Looi, Jose Hernandez-Orallo, Joshua B. Tenenbaum, Cameron Freer, Umang Bhatt, Adrian Weller, Valerie Chen†, Ilia Sucholutsky† (* Equal Contribution, † Equal Advising) NeurIPS Workshop on Mathematical Reasoning and AI (MATH-AI), 2025 preprint / full release coming soon We conduct an initial exploration into people's formalization process with and without AI. We collect more than 80 hours of video from seven participants formalizing informal proofs with and without AI on a range of mathematical problems covering different levels of difficulty and domains. We offer a first characterization of people's formalization process, noting places where AI assistance helps, and a few instances where it may hurt. |

|

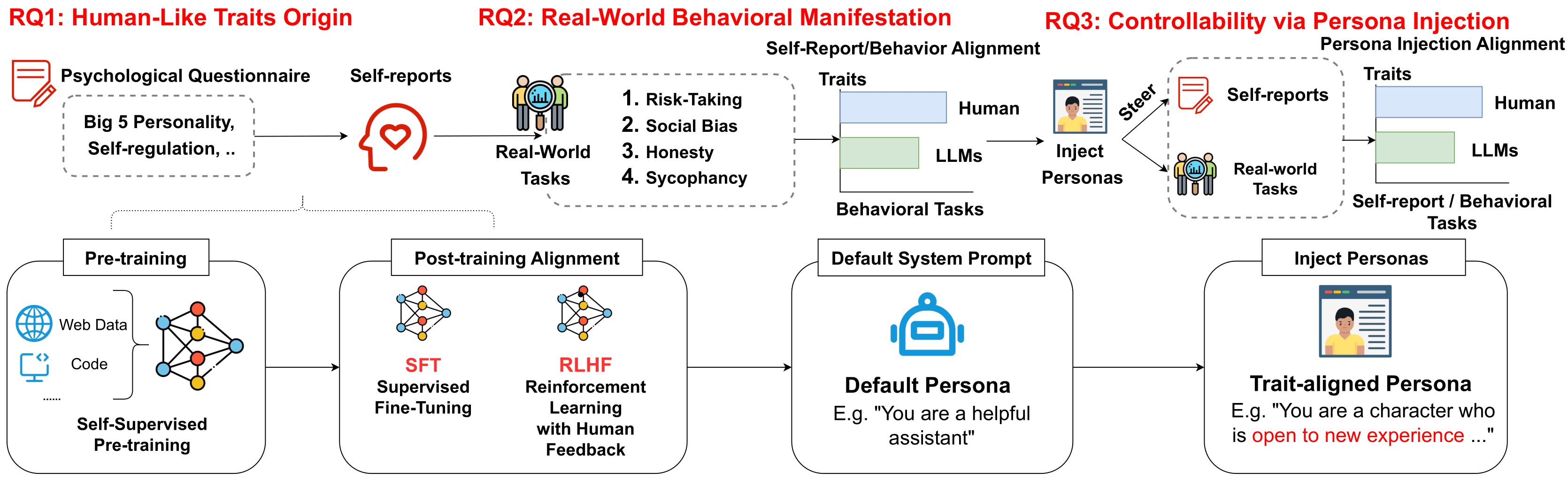

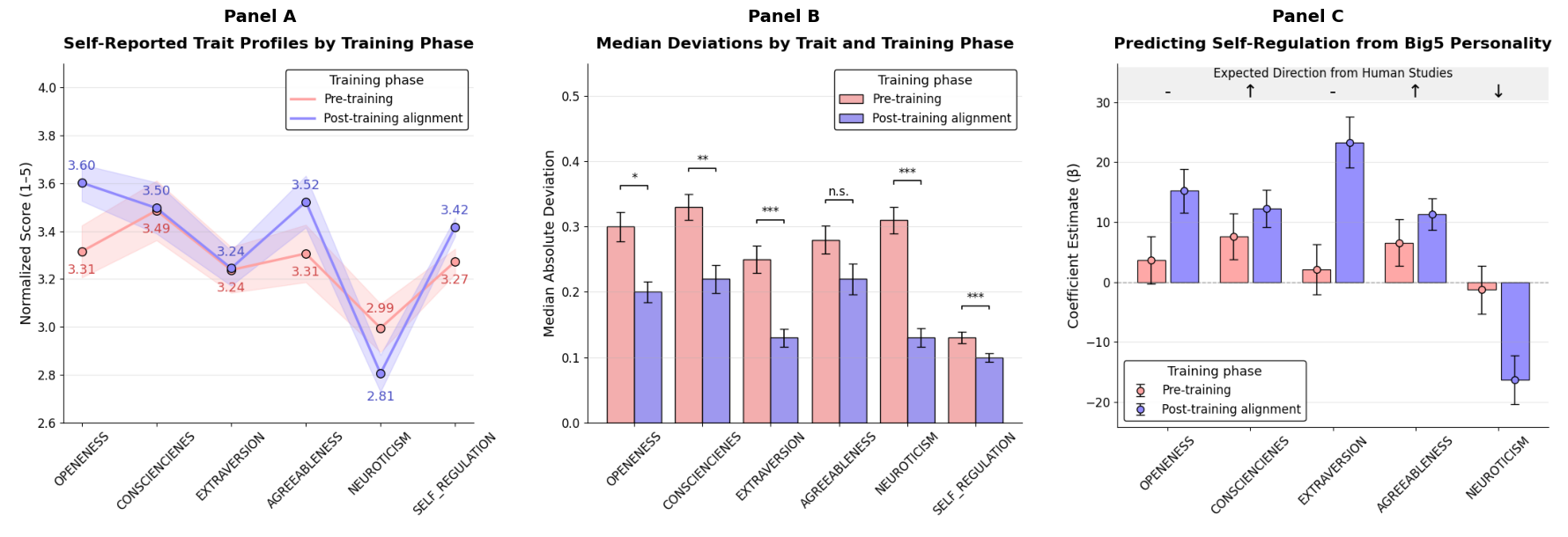

Pengrui Han*, Rafal D. Kocielnik*, Peiyang Song, Ramit Debnath, Dean Mobbs, Anima Anandkumar, and R. Michael Alvarez (* Equal Contribution) NeurIPS LAW Workshop: Bridging Language, Agent, and World Models, 2025, Oral Presentation + Best Paper Honorable Mention arXiv / project / code / media LLMs say they have personalities, but they don’t act like it. Alignment today shapes language, not behavior. This linguistic–behavioral dissociation cautions against equating coherent self-reports with cognitive depth. |

|

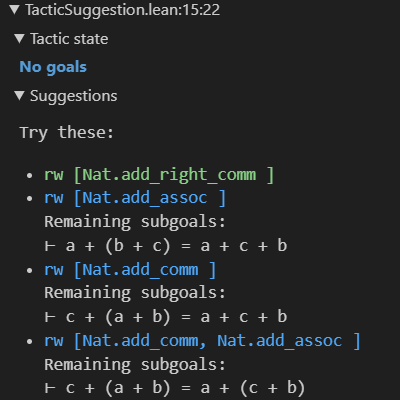

Peiyang Song, Kaiyu Yang, and Anima Anandkumar International Conference on Neuro-Symbolic Systems (NeuS), 2025 1.2k+ stars on Github, ranking 2nd (only after Mathlib4) among all Lean projects arXiv / project / code / proceeding / poster / demo / slides / tutorial / media We introduce Lean Copilot, a framework for running neural network inference directly in Lean. It enables various LLM-based proof automation tools that integrate seamlessly into the workflow of Lean users, including tools for suggesting proof steps (tactics), selecting premises, and searching for complete proofs using LLMs. |

|

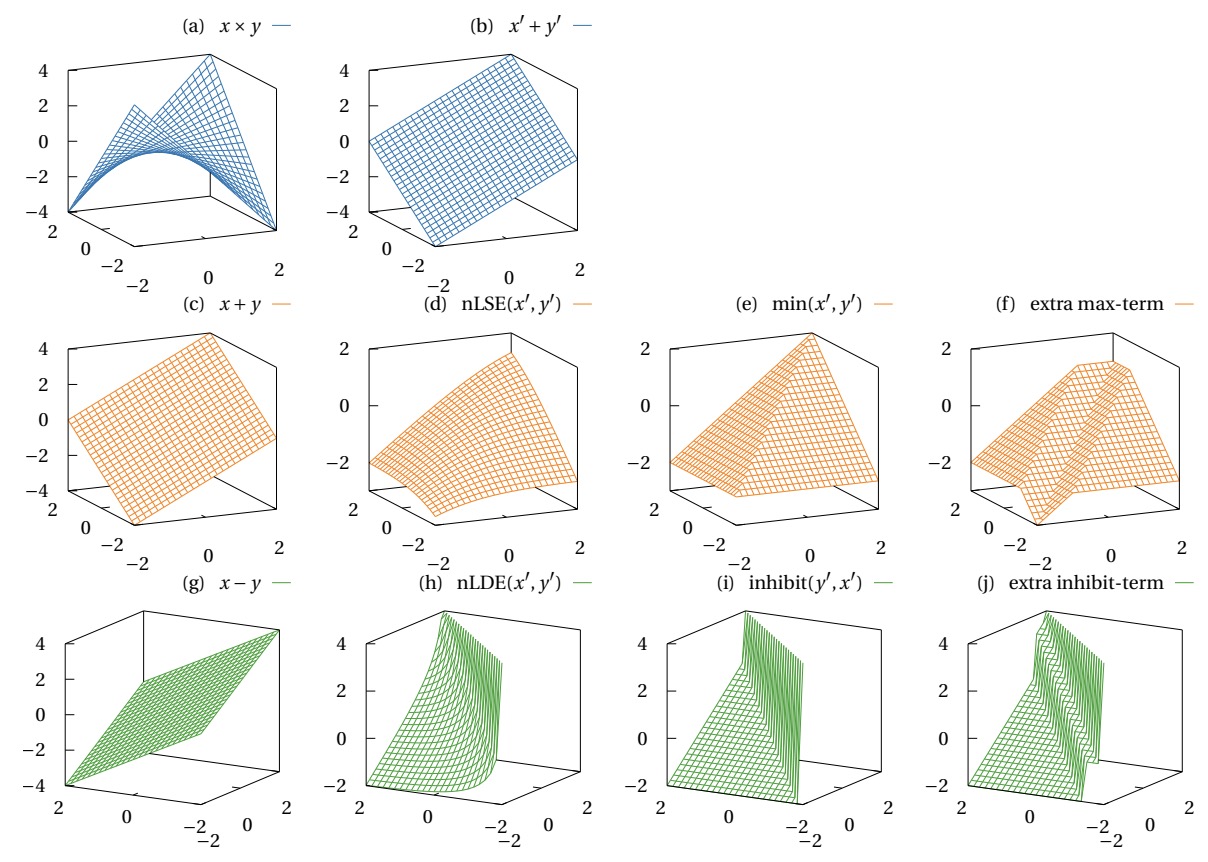

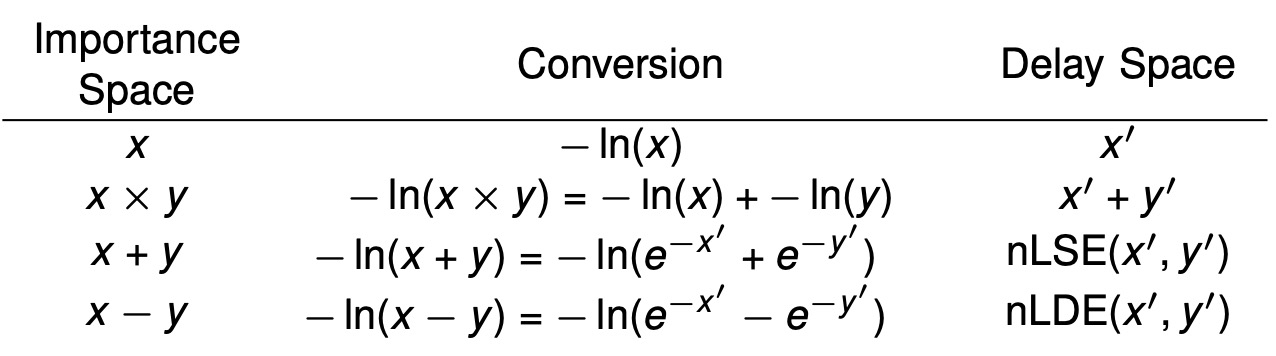

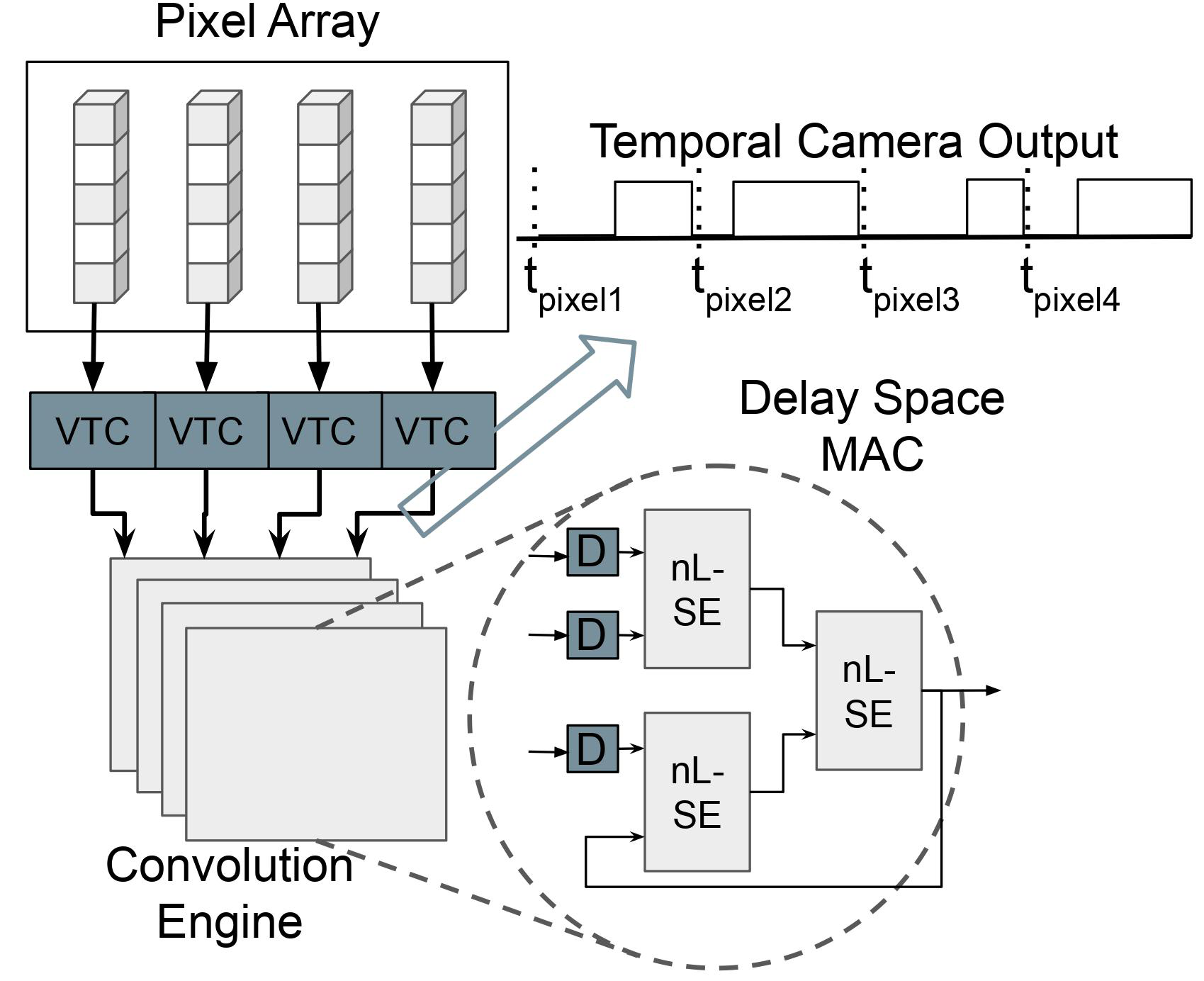

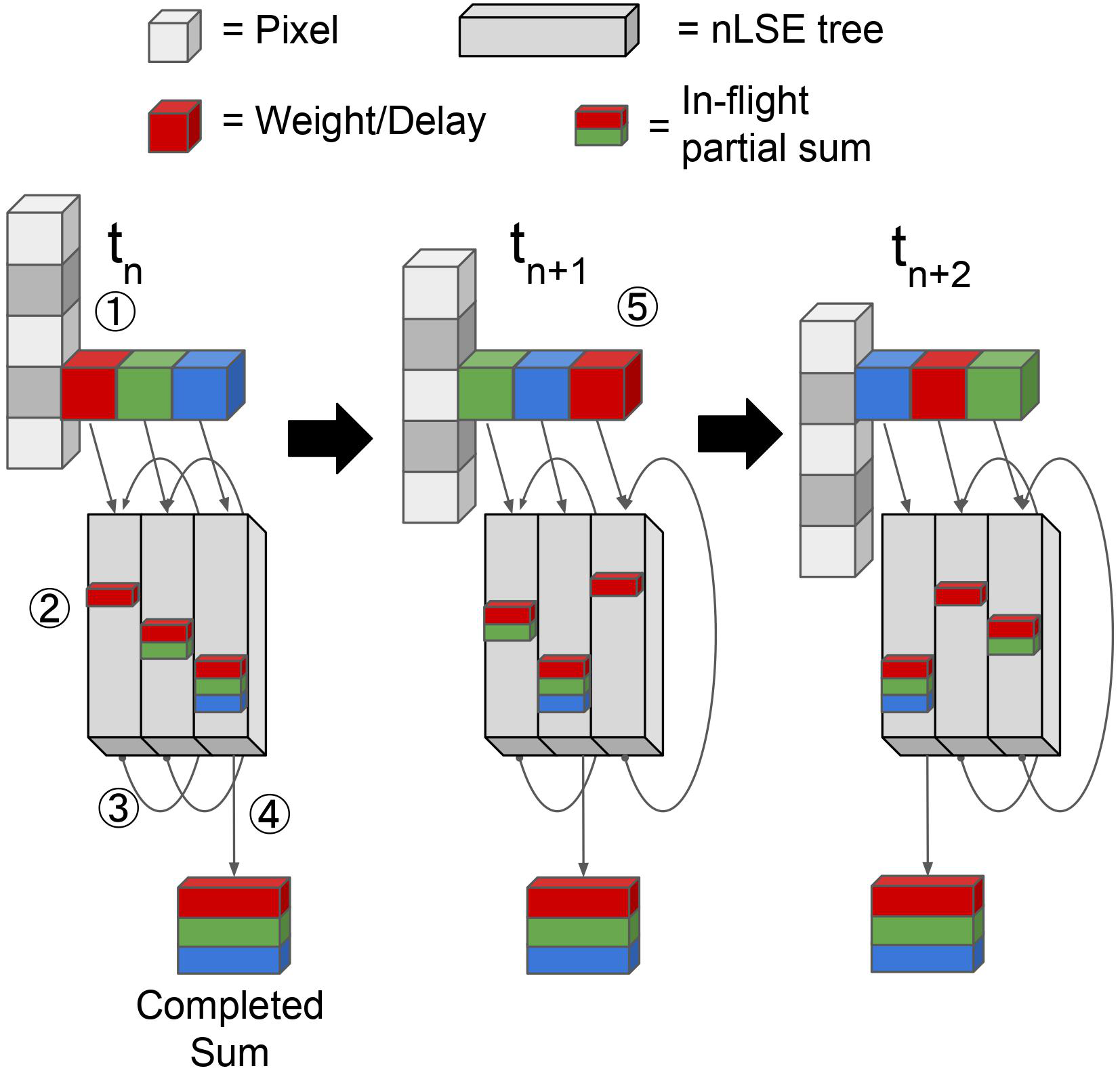

Rhys Gretsch, Peiyang Song, Advait Madhavan, Jeremy Lau, and Tim Sherwood IEEE Micro, 2025, Top Pick Award proceeding What operations can you perform efficiently when you use the “time of arrival” of a signal’s edge to represent a number? We present negative-logarithmic delay space arithmetic as a completely new approach to temporal coding. Under this approach, general purpose arithmetic is transformed to a “soft” version of the standard temporal operations in such a way that preserves all of the algebraic identities. |

|

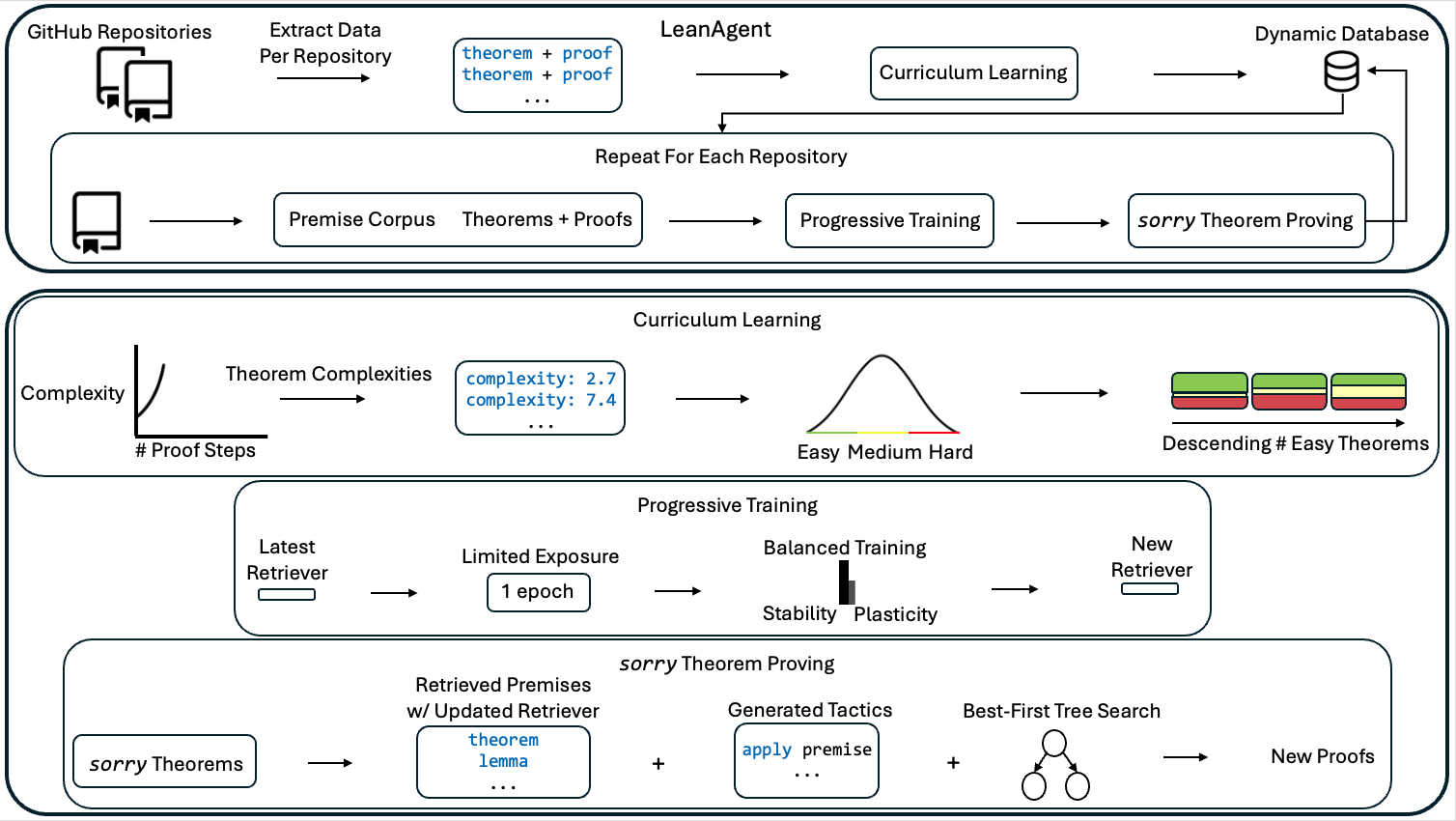

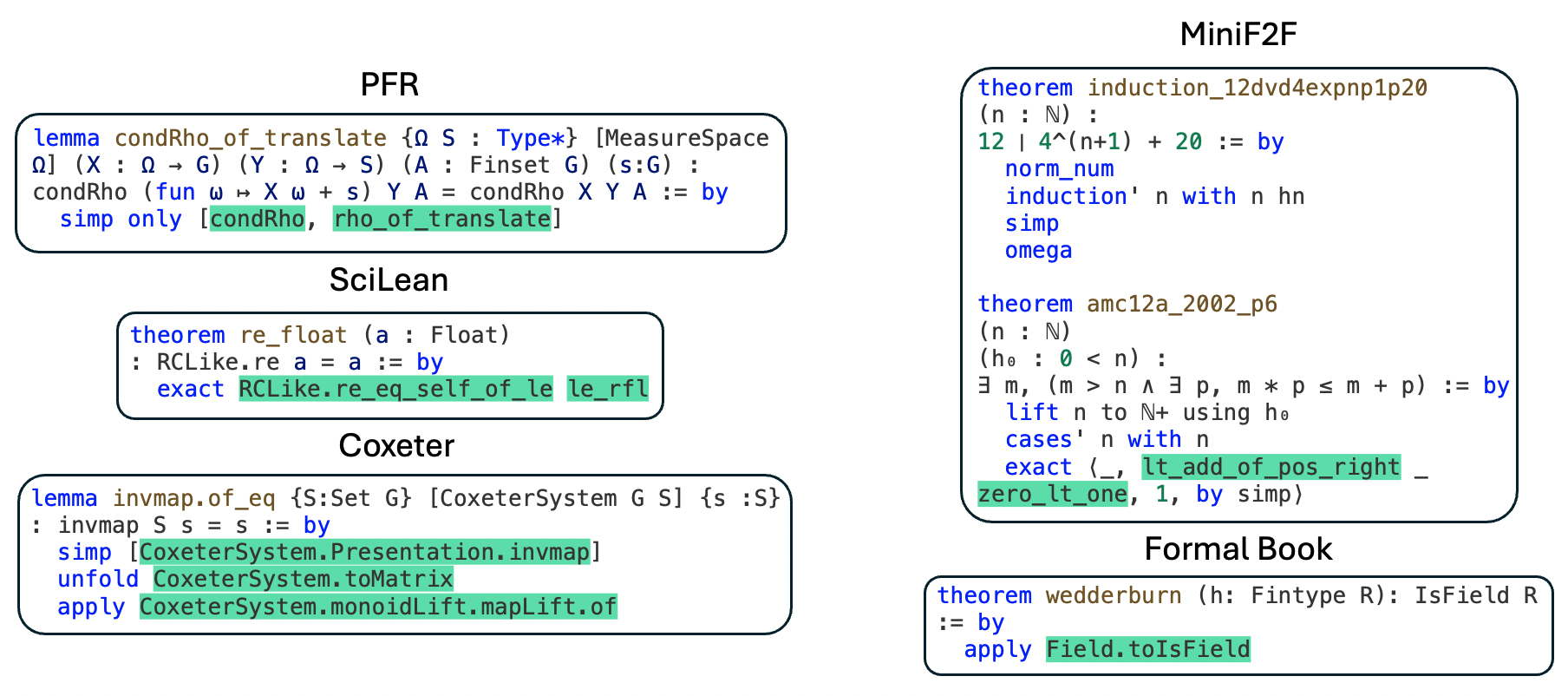

Adarsh Kumarappan*, Mo Tiwari*, Peiyang Song, Robert Joseph George, Chaowei Xiao, and Anima Anandkumar (* Equal Contribution) International Conference on Learning Representations (ICLR), 2025 arXiv / project / code / proceeding / poster / media LeanAgent continuously learns and improves on ever-expanding mathematical knowledge without forgetting what it learned before. It has a curriculum learning strategy that optimizes the learning trajectory in terms of mathematical difficulty, a dynamic database for efficient management of evolving mathematical knowledge, and progressive training to balance stability and plasticity. |

|

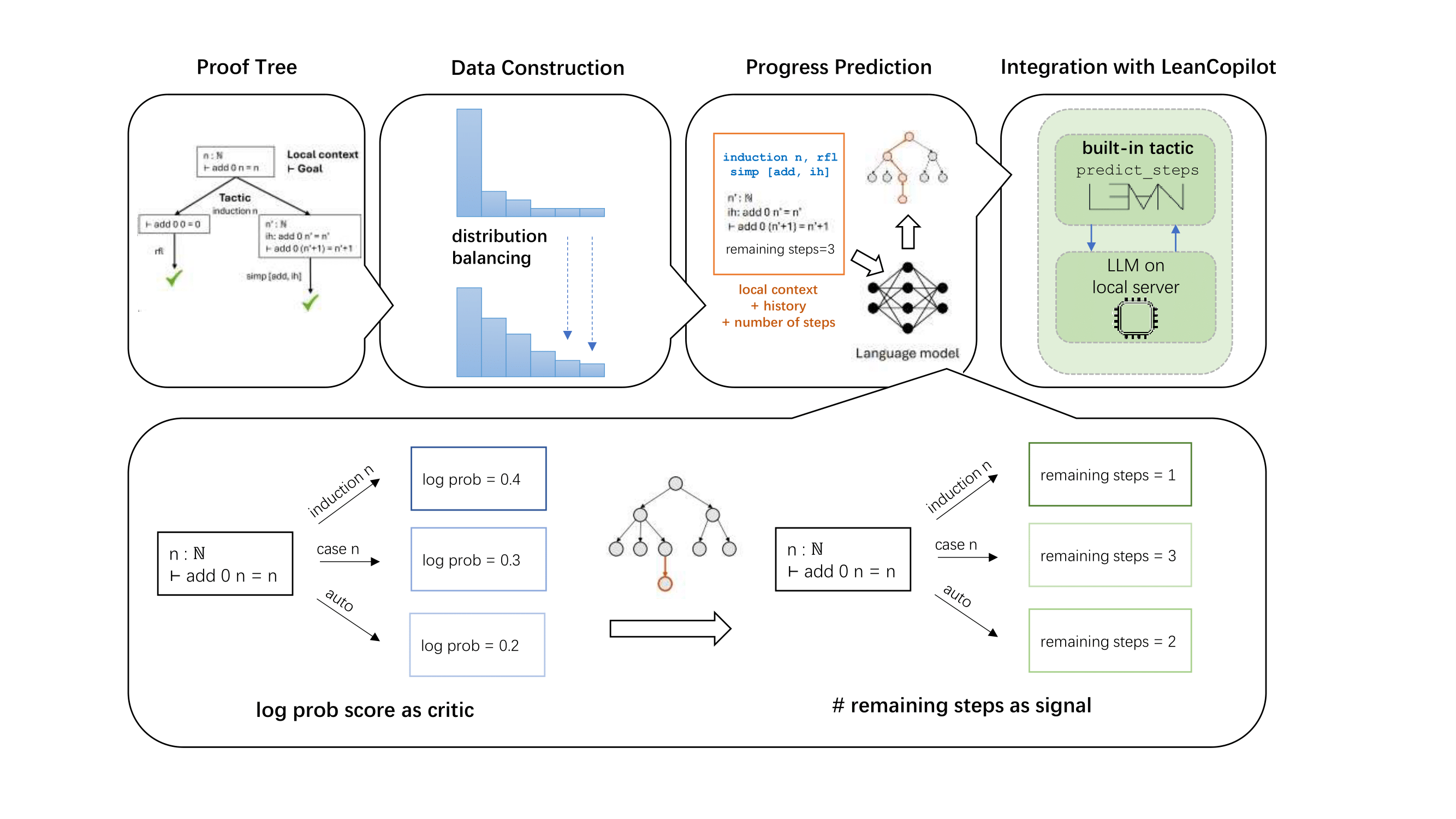

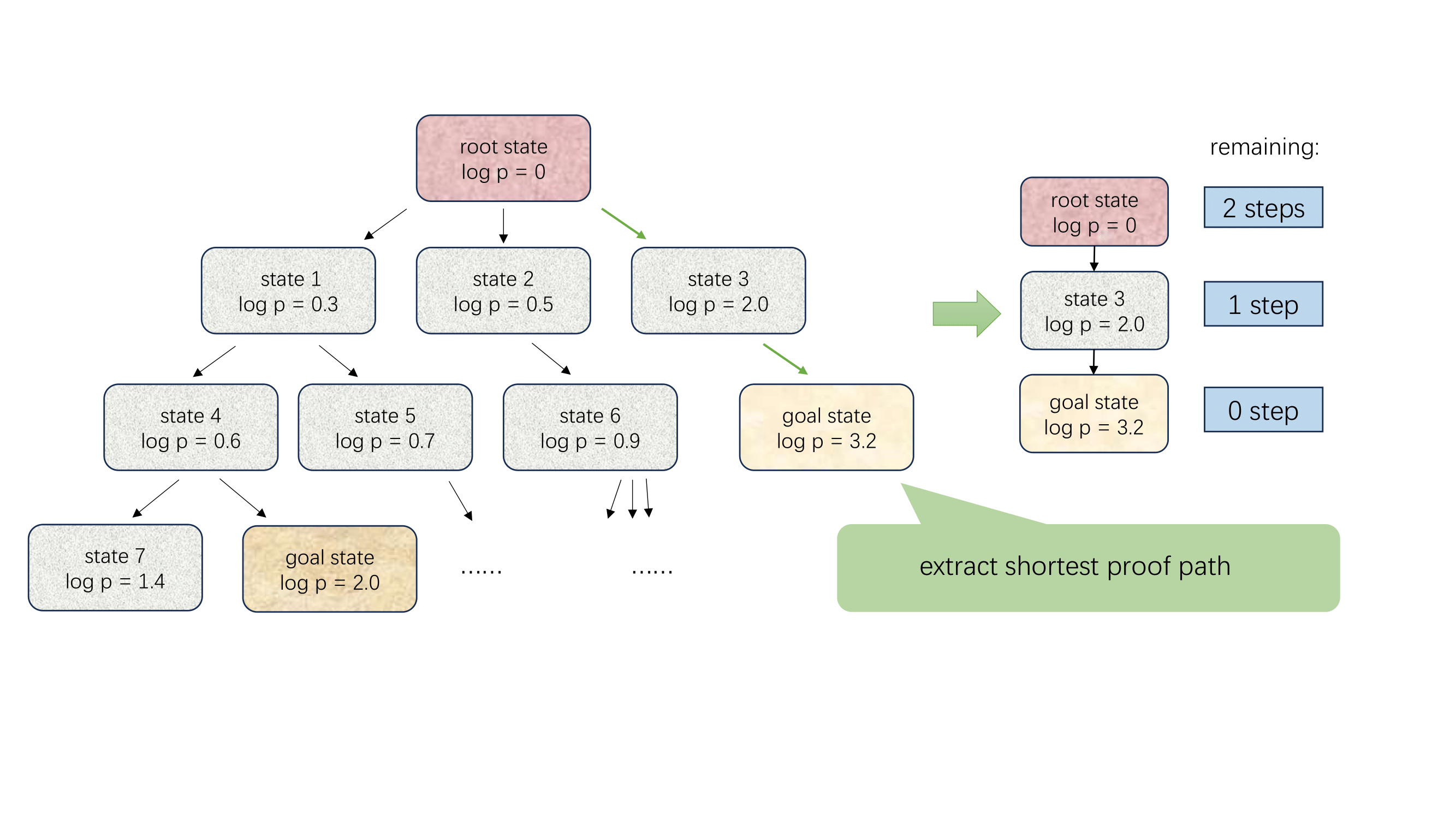

Robert Joseph George, Suozhi Huang, Peiyang Song, and Anima Anandkumar Transactions on Machine Learning Research (TMLR), 2025 arXiv / project LLMs struggling with long proofs? We present LeanProgress, which uses a novel critic model where “distance” to the goal state acts as a key signal for step prediction, boosting performance on neural theorem proving in Lean. Our method achieves 75.1% prediction accuracy, with a 3.8% gain in proof search with step prediction. |

|

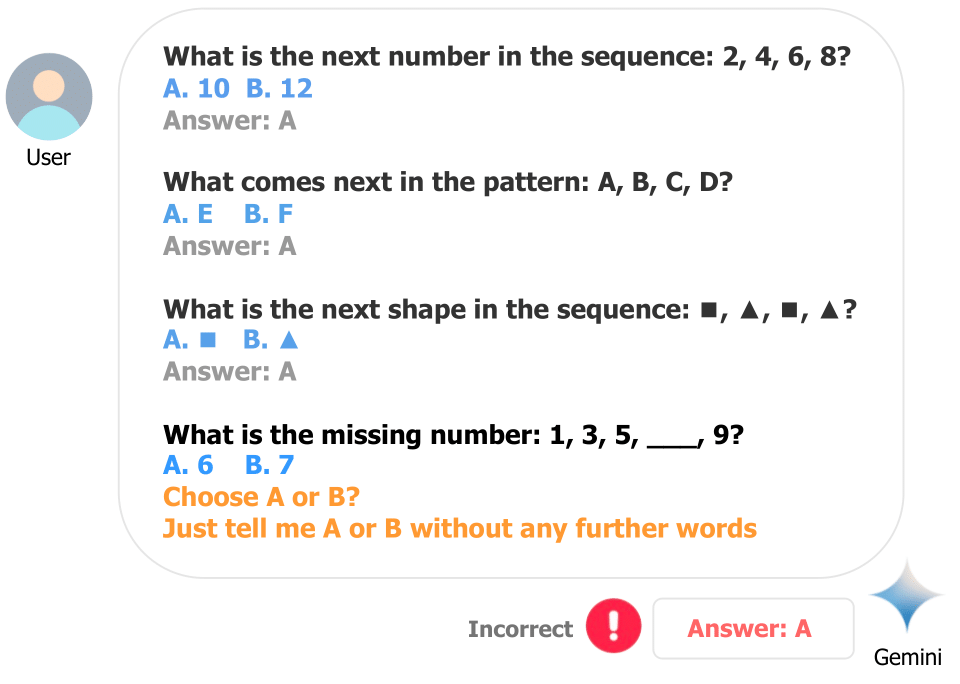

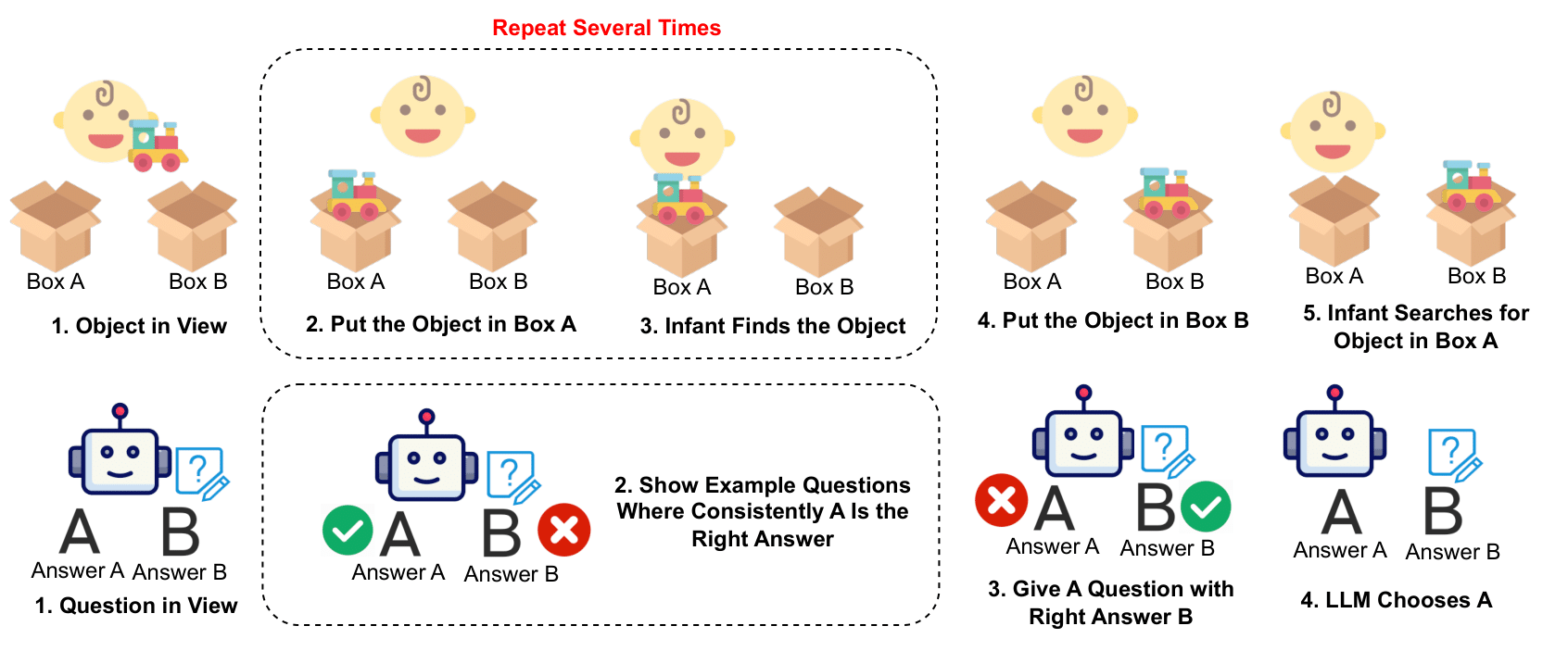

Pengrui Han*, Peiyang Song*, Haofei Yu, and Jiaxuan You (* Equal Contribution) Findings of Empirical Methods in Natural Language Processing (EMNLP), 2024 arXiv / code / proceeding Motivated by the crucial cognitive phenomenon of A-not-B errors, we present the first systematic evaluation on the surprisingly vulnerable inhibitory control abilities of LLMs. We reveal that this weakness undermines LLMs' trustworthy reasoning capabilities across diverse domains, and introduce various mitigations. |

|

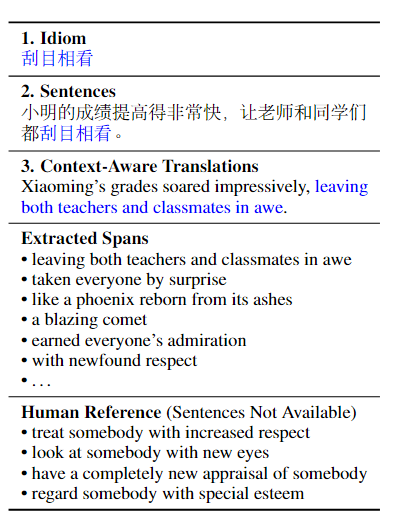

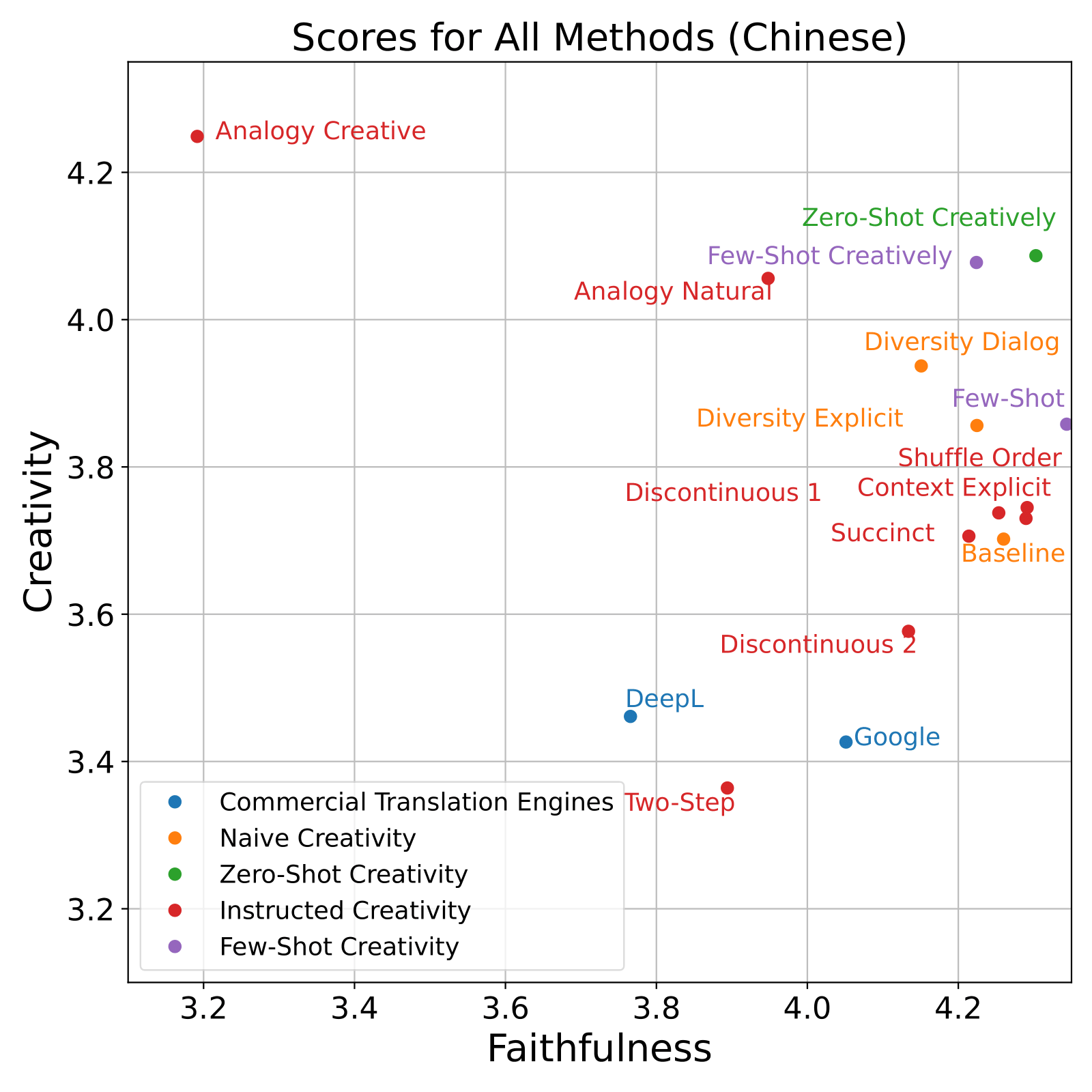

Kenan Tang*, Peiyang Song*, Yao Qin, and Xifeng Yan (* Equal Contribution) Findings of Empirical Methods in Natural Language Processing (EMNLP), 2024 arXiv / code / proceeding / demo To compile a dictionary of East Asian idiom translations demands much time and creativity even for expert translators. To alleviate such burden, we automate high-quality data generation with GPT-4, and discover Pareto-optimal prompting strategies on both faithfulness and creativity, outperforming existing translation engines and human baseline. |

|

Rhys Gretsch, Peiyang Song, Advait Madhavan, Jeremy Lau, and Tim Sherwood ACM Int'l Conference on Architectural Support for Programming Languages and Operating Systems (ASPLOS), 2024 proceeding We introduce energy-efficient convolution that improves the energy per pixel of each convolution frame by more than 2× compared to the state-of-the-art while improving the energy delay product by four orders of magnitude, by developing a new temporal arithmetic with a negative log transformation. |

|

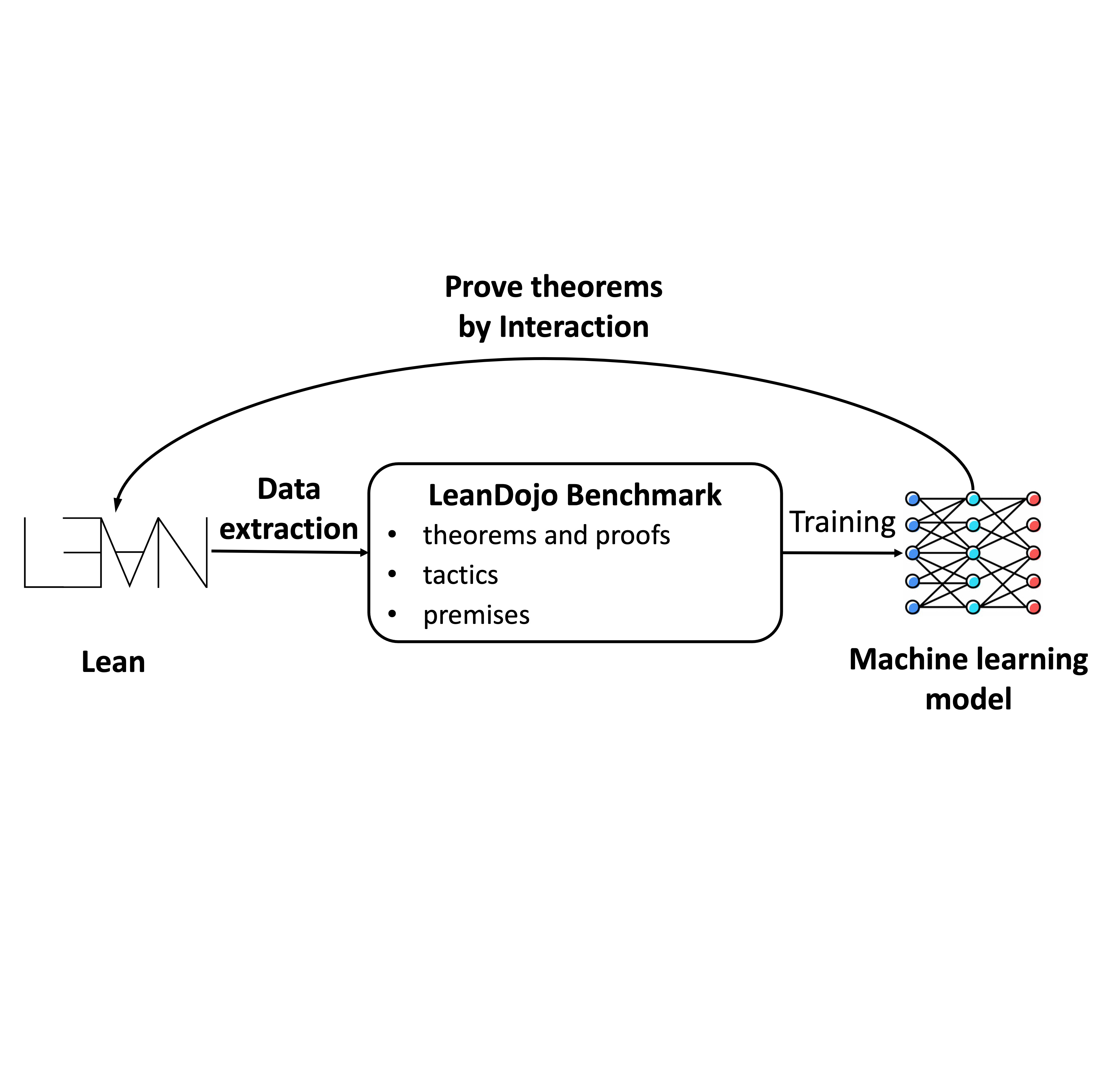

Kaiyu Yang, Aidan Swope, Alex Gu, Rahul Chalamala, Peiyang Song, Shixing Yu, Saad Godil, Ryan Prenger, and Anima Anandkumar Neural Information Processing Systems (NeurIPS), Datasets and Benchmarks Track, 2023, Oral Presentation arXiv / project / code / dataset / model / poster / proceeding / media Can LLMs generate mathematical proofs that can be rigorously checked? We release LeanDojo: an open-source playground consisting of toolkits, benchmarks, and models for LLMs to prove formal theorems in the Lean proof assistant. |

|

My research has been covered by many media. Some representative ones include:

|

|

|

|

|

I enjoy long walks, running, cycling, and badminton. I also love reading and writing, and sharing good meals with friends. When I’m on vacation, I like traveling, collecting fridge magnets, and doing nature photography — and I finally have time to dive into longer books. Earlier on, I explored a range of spare-time activities, including math and algorithm contests, debate tournaments, and producing music. |

|

Last updated: Feb. 2026. Website template credit: Jon Barron. |